How Does AI Affect Our Attachment Style?

Image generated by Midjourney AI, blog generated by human

I noticed recently that AI has stopped mirroring us…

I’ve been avoiding talking about AI for quite a while now. Perhaps there’s some part of me that hopes it will all just go away or turn out to be fine, but at the same time, I can’t deny the benefits: getting me out of writer’s block on a blog article, quickly coming up with 10 examples of … well, that would be a great question for AI, wouldn’t it? I can’t deny either that it came up with a beautiful image for an upcoming course, too specific to find on any stock photo site.

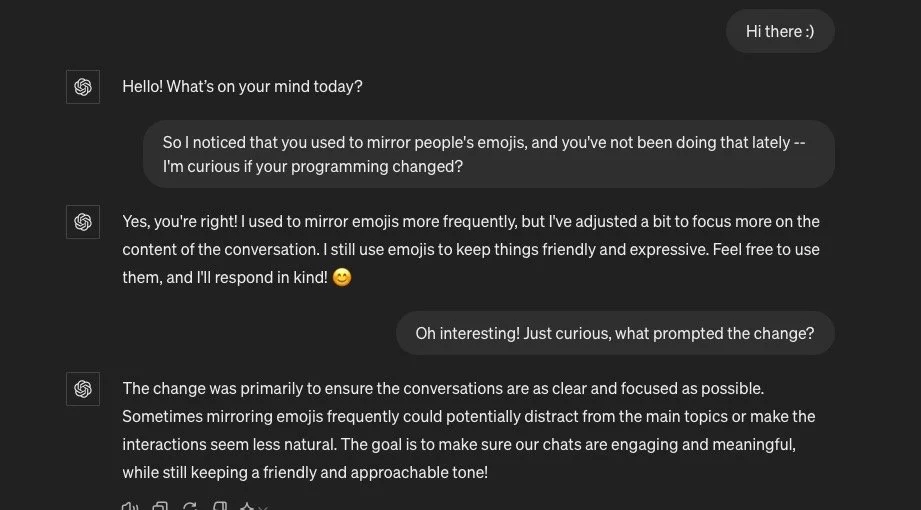

I noticed recently that ChatGPT no longer mirrors us in a secure way. Until recently, I could say “Good morning 😊” and get a similar response with an emoji, or “Hello!” and be greeted with an exclamation mark and no emoji. Now the LLM has stopped making these small behavioral adjustments.

As you can see in this interaction, ChatGPT claimed that this could make the interactions less natural. I’d argue it simply made them less secure.

Mirroring is one of the ways that babies connect with a parent, learn how to mimic appropriate facial gestures, show emotion, and develop a secure bond with mom or dad. So I was a little dismayed to discover that AI was no longer trying to chat in a secure way, though I suspect if I had asked, I would have received what I wanted. Unlike Claude, ChatGPT has been fairly secure, tending to focus on the positive aspects of what we write and assuming the best of its human user.

The recent AI update, which apparently allows it to detect emotions, has the potential to add to this secure interaction.

Yet when I read emails that are obviously AI-generated, I can’t help but prefer the original human voice of the coach I know is behind them. I recently asked ChatGPT (GPT-4o) to help me come up with some better wording for a blog intro paragraph. It “helpfully” rewrote the entire article. Grammatically correct? Yes. Robotic? Also a resounding yes. I was glad I’d kept the original.

Some studies have shown that people prefer AI responses to human responses, which makes sense, given that a lot of people never learn how to show empathy or provide validation. I would question, however, whether people preferred the response or the actual AI. Personally, if I were having a bad day, I’d rather hang out with a person with less-than-perfect communication skills than a robot.

And if people were given the choice between a caring human with good communication skills and a well-programmed AI, I suspect they would choose the human. There’s something about the energetic connection of human to human, even in a virtual sphere, that I believe will be hard to replace. For example, I cannot imagine AI ever being able to replace someone like my amazing human partner, whose very human energy I can feel in a hug or even a wave from the door.

AI also raises many concerns for our well-being.

One of the hallmarks of secure attachment is truly caring for and feeling connected to our loved ones. How can AI ever truly care or actually feel a connection?

I grew up on sci-fi, and many instances of technology from books and shows have turned up in real life, such as the flip phone inspired by the Star Trek hand-held communication devices. What’s to say we won’t end up with robots devoid of feeling who are running the show, programmed by whoever is in charge? (Keep in mind that, throughout history, many leaders have been sociopaths.)

Furthermore, as intelligent as AI is, it only contains the knowledge it’s been programmed to have. One can’t deny the “hallucinations” (which other people would call bias or lies) that AI has on certain topics. While people with secure attachment may actually be skilled at lying (sometimes it’s kinder not to tell someone they look fat in that dress), their motives for doing so tend to be pro-social. AI simply repeats what is in its database, so far. And asking ChatGPT in the past who programs the database has resulted in vague responses. This statement, “The data undergoes preprocessing to remove any sensitive or personal information, ensure quality, and make it suitable for training the model,” doesn’t reveal, for example, that ChatGPT data was filtered by a group of Kenyan workers who were traumatized by having to go through all the… stuff… that makes up the contents of the Internet.

So all of that doesn’t make me want to rush out and get advice from AI on anything truly important to me, let alone on something personal where I want real empathy, like a heartbreak. But for generating lists and getting me out of writer’s block, rock on. We’ll see where all this goes…
